A live event on a linear TV channel usually means video of something that is actually happening in real time: a news program, a sports event, a sermon, or a reality show, for example. Contrary to a popular misconception, it is possible (and quite easy!) to play out these live events in a cloud-based playout environment.

In this article, we will look at live signal transport options, live event types and various triggering solutions, typical issues, ways to deal with latency, and handling of multiple live events.

Cloud playout workflows usually require broadcasters to “get” their content (the actual media files: video, audio, subtitles, etc.) into cloud storage. This process is called “media ingest”. Files are transported using industry-standard file transfer tools, either commercial UDP/TCP-based products (Aspera, Signiant, FileCatalyst, etc.) or open, standard protocols (FTP, SFTP, HTTPS from S3, etc.).

Of course, a live signal cannot be uploaded as a file; it has to be streamed directly to the playout(s). There are multiple ways to transport these live signals (feeds) to the cloud. Although open protocols/methods like RTMP or HLS can be used, they are not ideal: they offer only limited protection against packet loss and other network-related issues, provide no latency controls, and are not suited for switching between main and backup feeds (if such exist) at the receiving end.

In this chapter we will look at two alternative protocols, Zixi and SRT, which are both suitable for professional broadcast workflows (including live signal/feed transport), and describe various usage scenarios.

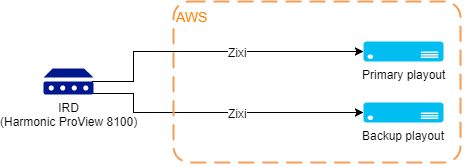

This is a simple scenario for broadcasters originating a live signal from a single point and playing it into a single TV channel. The live feed originates from, for example, a studio switcher, a satellite receiver or some other IP-based broadcast infrastructure device. The signal is sent through SDI or LAN to a Zixi-compatible IRD (either an industry-standard device or a generic Windows/Linux-based server with Zixi Feeder software installed). This IRD then pushes the Zixi feed to both the primary and the backup playout in the cloud.

A similar setup can be achieved by using SRT. In this case, the IRD can be a Haivision Makito, a T21 T9261-E, Wowza Streaming Engine running on a generic Windows/Linux/Mac server, or some other SRT-enabled device/software.

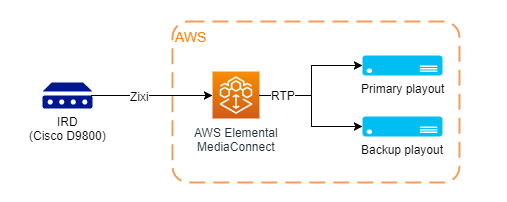

Since December 2018 it has been possible to transport broadcast-grade IP signals/feeds using the AWS Elemental MediaConnect service. This service supports RTP and Zixi, and provides redundancy, monitoring, and one-to-many routing capabilities. Compared to the previous setup example, only one signal/feed is sent from the live signal’s point of origination (studio, etc.). MediaConnect is a robust and cost-effective option if no advanced routing or live signal previewing is required.

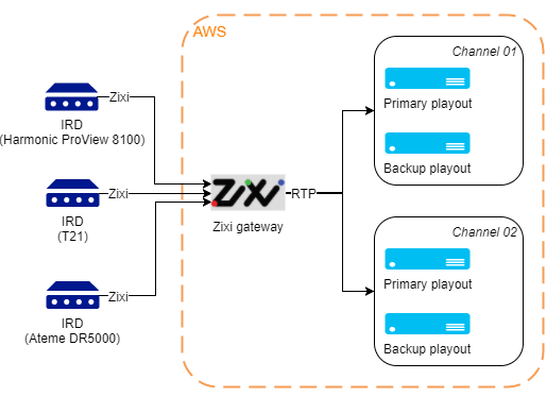

In usage scenarios with multiple live origins (multiple studios, external or remote locations, etc.) and multiple channels (and playouts), it is often not viable, either technically or commercially, to send all live feeds directly to all playouts. A more elegant approach is to deploy a software-based cloud gateway server. This allows you to monitor and analyze the incoming feeds, do routing and switching as necessary, and potentially record the incoming live feeds, either for a simple live repeat workflow or for QA purposes.

If Zixi specifically is used, you can run Zixi Broadcaster software in an active-active cluster, or deploy Zixi Zen for additional fault tolerance, scaling automation and better management overall. Zixi Broadcaster can also be employed to time-shift incoming live feeds: if, say, you need the live event to begin at exactly 8 PM in multiple separate time zones, you can record and delay the live feeds before they are pushed to the playouts. And, of course, from the administrator’s standpoint, it is usually better to consolidate the switching and routing in a gateway rather than manage all endpoints separately.
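The time-shifting arithmetic can be sketched as follows. This is only an illustration of how the per-region delay could be calculated, not Zixi Broadcaster configuration; the function name, the region list and the fixed UTC offsets (no daylight saving handling) are assumptions made for the example.

```python
from datetime import datetime, timedelta, timezone

def delay_for_local_start(live_start_utc, local_start_hour, utc_offset_hours):
    """Return how long a feed must be delayed so that it begins at
    local_start_hour (wall-clock time) in a zone with a fixed UTC offset."""
    zone = timezone(timedelta(hours=utc_offset_hours))
    live_local = live_start_utc.astimezone(zone)
    target_local = live_local.replace(
        hour=local_start_hour, minute=0, second=0, microsecond=0
    )
    if target_local < live_local:
        # The target hour has already passed locally, so use the next day.
        target_local += timedelta(days=1)
    return target_local - live_local

# Hypothetical event: goes live at 01:00 UTC, which is 8 PM in a UTC-5 zone.
live_start = datetime(2024, 1, 16, 1, 0, tzinfo=timezone.utc)
regions = [("Eastern", -5), ("Central", -6), ("Mountain", -7), ("Pacific", -8)]
for region, offset in regions:
    print(region, delay_for_local_start(live_start, 20, offset))
```

Under these assumptions the Eastern feed passes through undelayed, while the Pacific feed would have to be recorded and held for three hours.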

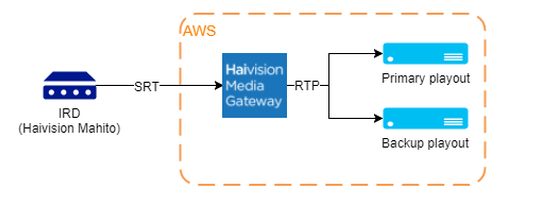

Haivision provides an on-demand (PAYG, pay as you go) product in AWS Marketplace called Haivision Media Gateway (HMG). HMG can be used as a router and splitter for SRT feeds, and it can also flip SRT to RTP/UDP and vice versa. HMG doesn’t currently provide monitoring and time-shifting features, and, unlike Zixi Zen, it does not scale automatically. Still, it is a good solution if SRT is the transport protocol of choice. The HMG business model is bandwidth based, i.e., you purchase 100 Mbit/s of throughput. If you need more than that, you can purchase another 100 Mbit/s package, and so on. Because of this relatively high initial throughput commitment, using HMG for only one or two feeds will usually not be very cost-effective.
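A quick way to see the effect of package-based pricing is to calculate how many 100 Mbit/s packages a set of feeds needs and how much of the purchased throughput is actually used. The sketch below assumes that only the summed bitrate of the listed feeds counts against the package; how HMG actually meters ingress and egress is not covered here, and the feed bitrates are made-up values.

```python
import math

PACKAGE_MBPS = 100  # throughput per purchased package, as described above

def packages_needed(feed_bitrates_mbps):
    """Number of 100 Mbit/s packages required for the summed feed bitrate."""
    total = sum(feed_bitrates_mbps)
    return max(1, math.ceil(total / PACKAGE_MBPS))

def utilization(feed_bitrates_mbps):
    """Fraction of the purchased throughput that the feeds actually use."""
    purchased = packages_needed(feed_bitrates_mbps) * PACKAGE_MBPS
    return sum(feed_bitrates_mbps) / purchased

two_feeds = [8, 8]    # hypothetical: two 8 Mbit/s contribution feeds
six_feeds = [20] * 6  # hypothetical: six 20 Mbit/s feeds

print(packages_needed(two_feeds), round(utilization(two_feeds), 2))
print(packages_needed(six_feeds), round(utilization(six_feeds), 2))
```

With two small feeds most of the purchased throughput stays unused, which is the cost-effectiveness concern described above.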

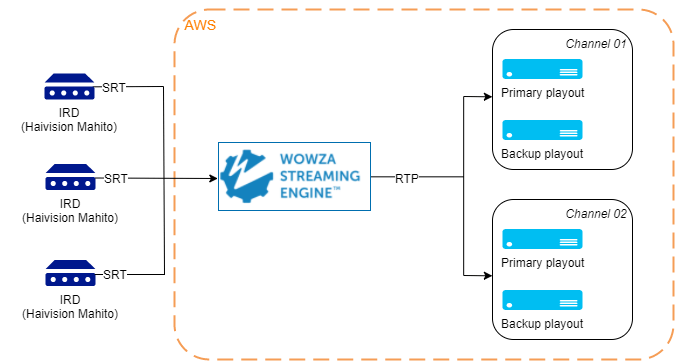

Alternatively, Wowza Streaming Engine can be used as a one-to-many (or, in this case, many-to-one) SRT gateway. Wowza SE can be run in a cluster; it ingests SRT feeds and outputs RTP feeds. Wowza is conveniently priced per instance, so you can send many feeds through one instance for the same monthly cost. The same company also offers a SaaS product named Wowza Streaming Cloud. While this service has added benefits like redundancy and automated provisioning, in my view it is more cost-efficient to run your own Wowza cluster(s) in AWS or any other public/private cloud of your choice, and scale it as necessary. Wowza SE is a cost-effective solution if SRT is the transport protocol of choice.

In general, live events can be of various types, depending on how they are started and finished. These characteristics determine the challenges and solutions for switching to and from the events, as well as the ways to handle feed latency.

Fully scheduled live events have start and end times that are set in advance in schedules/playlists. A typical example is a regularly planned live show from a studio which begins, let’s say, at 4 PM and ends at 5 PM. For well-timed switching to and from these events, you need to pre-configure the latency of the live signal from the studio to the playouts. It is usually measured using specialized countdown videos and then fixed on the transport layer using static buffer values (both Zixi and SRT allow you to do that).
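As a rough illustration of how a fixed latency value might be chosen and then applied, the sketch below takes a few measured studio-to-playout delays, picks one static buffer that covers all of them, and works out when the studio has to start so that the first frame reaches the playout on schedule. The measurement values, the headroom and the function names are assumptions for the example; the actual buffer is configured in the Zixi or SRT transport settings.

```python
from datetime import datetime, timedelta

def static_buffer_ms(measured_ms, headroom_ms=200):
    """Pick one fixed buffer value that covers every measured sample."""
    return max(measured_ms) + headroom_ms

def studio_cue_time(scheduled_start, buffer_ms):
    """Time at which the studio must start so that the first frame
    arrives at the playout exactly at the scheduled start."""
    return scheduled_start - timedelta(milliseconds=buffer_ms)

# Hypothetical delays (in ms) read off a countdown video at the playout.
samples = [3650, 3720, 3800]
buffer_ms = static_buffer_ms(samples)

scheduled = datetime(2024, 1, 15, 16, 0, 0)  # the 4 PM show
print(buffer_ms)
print(studio_cue_time(scheduled, buffer_ms))
```

Once the buffer is static, the same offset applies to the end of the event and to every switch in between.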

If you have advertisement (ad) breaks during the live events, then, again, it depends on whether the breaks are fully scheduled or not. For example, if there are exactly two breaks of two minutes each, the first starting 20 minutes into the show’s running time and the second starting 40 minutes into it, then, in terms of playout, it would be easy to train the studio employees to manually go in and out of ad breaks as needed.

If the breaks are unscheduled, you need a different method to trigger them. A popular method is to insert SCTE-35 cues into the live feed and configure the playouts to listen for them and go into ad breaks upon receiving the appropriate cues.
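SCTE-35 splice times are expressed as 33-bit values on a 90 kHz clock, so a receiver has to convert them and cope with counter wrap-around when working out how far away a splice point is. The sketch below shows only that arithmetic, assuming the cue has already been parsed into `pts_time` and `pts_adjustment` integers; it is not how any particular playout implements cue handling.

```python
PTS_CLOCK_HZ = 90_000  # SCTE-35 / MPEG-TS presentation clock rate
PTS_WRAP = 1 << 33     # PTS values are 33-bit counters

def splice_pts(pts_time, pts_adjustment=0):
    """Effective splice point: pts_time plus pts_adjustment, modulo 2^33."""
    return (pts_time + pts_adjustment) % PTS_WRAP

def seconds_until_splice(current_pts, pts_time, pts_adjustment=0):
    """Seconds from the current stream PTS to the splice point.
    The modulo handles wrap-around; a splice point that is already in the
    past would show up as a very large value and must be rejected upstream."""
    delta = (splice_pts(pts_time, pts_adjustment) - current_pts) % PTS_WRAP
    return delta / PTS_CLOCK_HZ

# The cue announces a splice 360,000 ticks ahead of the current position.
print(seconds_until_splice(900_000, 1_260_000))

# Same calculation across a counter wrap.
print(seconds_until_splice(PTS_WRAP - 90_000, 90_000))
```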

If SCTE-35 insertion in the live signal/feed is not available, an alternative method is to send REST API calls over HTTP/S to the cloud playouts. The studio operator manually marks the start of the break by pressing a special button in a web-based UI. This “button” captures the timecode of the moment it was pressed. The playout, in turn, is configured to respect the static latency of the live signal (e.g., 500 ms or 5 seconds, as measured when the studio-to-playout transport link was set up) and starts the ad break once the configured latency has elapsed. The same REST API call can be used for multiple channels, providing a uniform ad break workflow for all of them. Because cloud playout is online by nature, the API calls can be made from anywhere. This is a simple and straightforward way to manage breaks where there is one live studio and it is easy to train the studio employees. Veset Nimbus supports both the SCTE-35 and the REST API method.
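The timing logic behind such a button can be sketched as below. The payload fields, the action name and the channel identifiers are hypothetical and do not represent the Veset Nimbus API; the point is only that the break start is the press time plus the configured static latency, and that the same request can be built for every channel.

```python
import json
from datetime import datetime, timedelta, timezone

def build_break_requests(pressed_at, latency_ms, duration_s, channels):
    """Build one (hypothetical) ad break request per channel.
    The break starts once the static feed latency has elapsed."""
    start_at = pressed_at + timedelta(milliseconds=latency_ms)
    return [
        {
            "channel": channel,
            "action": "start_ad_break",  # assumed action name
            "start_at": start_at.isoformat(),
            "duration_s": duration_s,
        }
        for channel in channels
    ]

# The operator presses the button at 16:20:00 UTC; the feed latency is 5 s.
pressed = datetime(2024, 1, 15, 16, 20, 0, tzinfo=timezone.utc)
requests_to_send = build_break_requests(
    pressed, 5000, 120, ["channel-1", "channel-2"]
)
print(json.dumps(requests_to_send, indent=2))
```

In a real integration, each payload would be sent to the playout’s own endpoint with proper authentication.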

Live events can be started and finished manually in the cloud playout. It is possible to do a manual live override of the timeline, where the playout operator switches to the live signal/feed while the actual scheduled timeline continues running in the background. When the manual override is finished, the playout automatically returns to the scheduled timeline, and playback skips ahead to the point it would have reached if there had been no operator intervention.
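The “skip ahead” behavior amounts to looking up which scheduled item covers the moment the override ends, and how far into that item playback should resume. The sketch below shows that lookup for a simplified timeline of `(start, duration, name)` tuples in seconds; the data structure and names are assumptions for illustration, not the playout’s internal model.

```python
from bisect import bisect_right

def position_at(timeline, t):
    """Return (item name, offset into item) for time t, or None if nothing
    is scheduled then. timeline is a list of (start_s, duration_s, name)
    tuples sorted by start time."""
    starts = [item[0] for item in timeline]
    index = bisect_right(starts, t) - 1
    if index < 0:
        return None  # t is before the first item
    start, duration, name = timeline[index]
    offset = t - start
    if offset >= duration:
        return None  # t falls into a gap or after the last item
    return name, offset

timeline = [
    (0, 1800, "News"),
    (1800, 3600, "Movie"),
    (5400, 1800, "Documentary"),
]

# The override started at 1500 s (during News) and ended at 2700 s.
print(position_at(timeline, 2700))
```

Here the playout would rejoin the movie 900 seconds in, exactly where it would have been without the override.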

It is also possible to begin and end live signal playback using the TAKE button in the Nimbus web UI. In this case, the live event has to be scheduled in advance, but its start and end times are chosen manually by the channel operator. You can also use the “Wait for operator” option for the scheduled live event, which makes the timeline shift automatically based on the precise moment the TAKE command is executed and on the eventual duration of the live event. Usually this means that the playout operator will need to correct the channel’s timeline afterwards to compensate for any gaps or overlaps that resulted from the manual TAKE operation.

Some live events might be partially scheduled “by nature”. For example, a sports event would typically have a set start time and a variable duration and end time. In this case, you would use the “Wait for operator” option for the live event and possibly also lock the next significant event in the timeline to see what the resultant gap is. Again, the live event can be ended by the operator manually in the playout’s web UI, or by using the previously mentioned SCTE-35 cues or even REST API calls.
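The effect of a manual TAKE on the rest of the timeline can be sketched as a simple ripple: the live event gets its actual start and duration, the following events move so that they play back to back, and a locked event stays where it is, which exposes the resulting gap or overlap. The event structure, the function name and the numbers below are assumptions for illustration and not the Nimbus data model.

```python
def apply_take(events, live_index, take_time, live_duration, locked_index=None):
    """Ripple the timeline after a manual TAKE.
    events: list of dicts with 'name', 'start' and 'duration' (seconds).
    locked_index, if given, must be greater than live_index.
    Returns (shifted events, gap before the locked event); a negative gap
    means the preceding content overlaps the locked event."""
    shifted = [dict(event) for event in events]
    shifted[live_index]["start"] = take_time
    shifted[live_index]["duration"] = live_duration

    stop = len(shifted) if locked_index is None else locked_index
    for i in range(live_index + 1, stop):
        previous = shifted[i - 1]
        shifted[i]["start"] = previous["start"] + previous["duration"]

    if locked_index is None:
        return shifted, 0
    previous = shifted[locked_index - 1]
    gap = shifted[locked_index]["start"] - (previous["start"] + previous["duration"])
    return shifted, gap

schedule = [
    {"name": "Match (live)", "start": 0, "duration": 6300},
    {"name": "Studio analysis", "start": 6300, "duration": 900},
    {"name": "Evening news", "start": 7200, "duration": 1800},  # locked
]

# The operator takes the match 30 s late and it runs for 6900 s.
shifted, gap = apply_take(schedule, 0, 30, 6900, locked_index=2)
print(shifted[1]["start"], gap)
```

A negative result like the one in this example tells the operator how much content has to be trimmed or dropped before the locked event.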

One of the most frequently asked questions is about latency in cloud playout. The latency of a system is the delay between the instant a sample enters the system and the instant it leaves. In a TV broadcast system, this is the delay between the first pixel of a video frame entering the transmitter’s video input and the first pixel of the same frame leaving the receiver’s video output. While latency in on-premise SDI-based workflows is considered non-existent or negligible, in a system transmitting compressed video from a studio to cloud playout it will be at least 500 milliseconds, and more depending on the latency of the network connection in between. Traditional broadcasters often stress the need to achieve zero latency, but the world has changed: most TV channel viewers use mobile devices streaming feeds from OTT networks. The streaming protocols used there, like HLS/DASH or even RTMP, add a substantial amount of latency, usually starting at a few seconds and sometimes reaching minutes. Signal compression/encoding in the processing and delivery chain also adds some latency, but this part is usually negligible.

In a cloud playout workflow, the typical expected live signal latency (studio to playout) starts at about 4 seconds. It should be measured, fixed using static buffers, and respected when switching. Of the switching methods mentioned in the previous chapters, we consider SCTE-35 based switching to and from the live signal the most precise one available for cloud-based workflows, followed by REST API requests.

It is possible and quite straightforward to play live events in a linear TV channel using cloud-based playouts. Live shows from a studio, live sports events, and live productions from off-site locations can be transported and played out reliably, and multiple broadcasters have been doing this for several years already. There are also several methods to transport, route, switch and monitor the live signals/feeds. Besides Tier 1 level uncompressed IP transport, there are two major protocols/technologies in use today: Zixi and SRT. We see more and more hardware and public cloud providers building products with built-in support for these transport technologies, which shows that the broadcast industry is moving towards fully cloud-based broadcasting.